THIS BLOG IS OUT OF DATE. For a more recent analysis, see this blog.

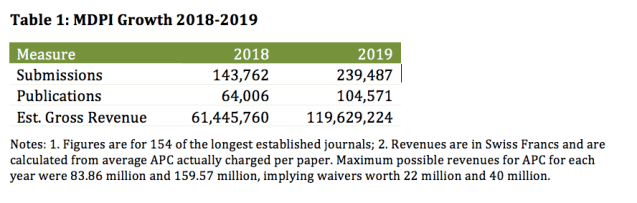

In a previous blog (published December 2019) I explored the performance and changes of the MDPI journals, examining their growth up to the end of 2018. Since then, data for 2019 have become available, and they are even more remarkable than before (Table 1). Submissions in 2018 were over 140,000. In 2019 they were just under 240,000. Over 64,000 papers were published in 2018; in 2019 over 100,000. Estimated gross revenues (see note below Table 1) have increased by nearly 60 million Swiss francs. A downloadable PDF of this blog and the source data are available at the end of the document.

In this blog I reflect on what these trends mean for the arguments of my last blog: specifically, does the growth show signs of vanity publishing? I also reflect on the responses to the first open letter that I wrote to MDPI. My headline finding is that growth has continued at the same rate, if not faster, because the journals provide a service that increasing numbers of academics find useful. At the same time, the experience of publishing with and working for these journals remains uneven.

Figures are derived from a subset of the more established journals for which data are available (N=154). Not all 218 journals in the portfolio are included because the more recent journals do not have sufficient data to present.

A. Trends in publications 2015-2019.

1. The sustained growth in submissions and publications is shown in Figure 1 below. It speaks for itself. It is worth, however, highlighting the dramatic financial returns MDPI is realising (Table 2). I would be surprised if many other sectors could demonstrate these levels of sustained growth.

- The growth, and revenues, have not been achieved by lowering rejection rates. The journals with the lowest rejection rates have continued to account for only a small minority of publications and fees (Table 3 and Table 4). Indeed, the number of journals with the lowest rejection rates has declined, and so have publications in them. 69% of published papers and 73% of fees derive from journals with rejection rates of over 50% (Table 5). These rejection rates are still not high compared to the strictest journals managed by other publishing houses (with rates of over 95%).

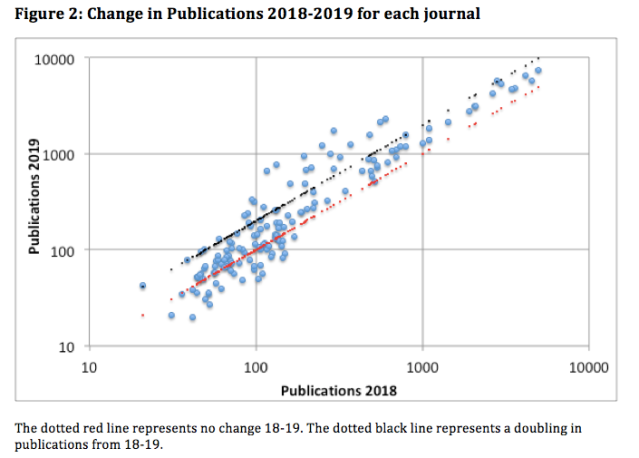

- Growth in 2019 was driven by already popular journals becoming more popular. The largest journals have continued to thrive, but there has also been a surge in submissions to the smaller journals (publishing between 100 and 1000 papers per year). A notable minority of these have seen publications more than double compared to 2018. The journals which show declines tend to be the smallest.

- MDPI journals are becoming increasingly mainstream: 137 are now covered by the Web of Science (up from 85 in 2016), 134 by Scopus (up from 60 in 2016) and 70 by SCIE (up from 27 in 2016).

In this respect my conclusions in the previous open letter remain unchanged. Despite doubts to the contrary, the success of MDPI journals cannot be explained by lax standards. They have grown because submissions to them have grown. They offer fast, relatively cheap, open access publication, and their reputation, as measured by citation data and listings on indices, continues to grow.

B. Responses to the first open letter.

There was considerable interest in the first letter I wrote, and the responses tended to fall into three camps. First, a number of observers were surprised. They, like me, were not expecting the results I produced.

The second camp proved more critical. These responses came from authors who had found the experience of managing special issues problematic because too many papers were published in them. They surmised, correctly, that I have never led a special issue in an MDPI journal. I was directed to online discussions about the journals’ flawed processes (here and here) which dismiss them inter alia as ‘a predatory outfit’. Others pointed me to the protest resignation of one journal’s editorial board, and to MDPI’s temporary blacklisting on Beall’s list.

I have found these engagements really useful. But they have not changed my conclusions. This is partly because a number of other editors and authors also got in touch with precisely the opposite sentiments. They were the third camp. They valued working with and publishing in MDPI. I was also not persuaded by all the criticisms. MDPI was indeed on Beall’s list, but it was later removed from it. It does not appear on any predatory-publishing blacklist, and is in fact listed on the DOAJ ‘white-lists’ (see here and here). One set of editors did resign, but there are over 200 other journals where the leadership are more content.

When I disagree with the critics it is not because I deny the validity of their experiences. It is rather that I do not think that this is the only story that can be told about MDPI. There have clearly been a series of problematic encounters between researchers and these journals. But I cannot see how these justify the conclusion, even on Twitter, that all the journals are therefore flawed. These individual cases of bad practice do not amount to any systematic refutation of the data I have presented above. If these experiences were the general rule, then why has there been this extraordinary increase in demand for publication that appears to derive from diverse research communities themselves? I was also concerned that one of the negative write-ups of MDPI journals was tinged with racism.

However, while the critics do not persuade me, some of the criticisms certainly land. Most seriously, there have been recent cases of inappropriate APC waiver allocation policies (see here and here), in which journal managers deliberately excluded authors from the global South. Full kudos to WASH colleagues for drawing attention to this. Because they did so, MDPI’s central management apologised and reversed the journal’s decision.

In a recent tweet Arun Agrawal, who serves as editor of World Development, listed a series of complaints that, in his view, make the publishing house problematic. First there is the problem of unwanted emails. In case anyone from MDPI is reading this, here are a couple of examples of unwanted emails which I have protested against and which very few academics ever want to receive. Reminders to join editorial boards that already have hundreds of members are also problematic.

Then Arun questioned the lack of service to academic societies. MDPI reports that it works with 95 academic and professional societies. It is not clear, however, what financial relationships are involved. So Arun may well have a point, but then the same is true of many publishing houses. Are we happy, for example, with the sums that Elsevier gives back to academia? How can we know at all what those sums are? There is a general lack of transparency across publishing houses as to what they give back to the academy which provides them with so many free papers to publish.

Finally, I found this comment of Arun’s particularly interesting: ‘remarkably high acceptance rates are major problems in @MDPIOpenAccess model. They are freeriders – If we all did same, quality would plummet.’

As I observed in my previous blog, the speed of the MDPI review and revision process makes it easier for mistakes to happen. Median time from submission to publication is now 39 days. It is strange that MDPI has not moved to set up a more exclusive journal, such as a ‘best of’ format which promotes the best papers across its journals. It is also strange that it has not sought to limit the size of some of its largest journals. The more papers you publish, the more mistakes are likely.

However, I do not believe that journals with high rejection rates police quality alone. The history of academic publishing is one of proliferating journals. New ones are set up by new epistemic communities because they cannot get past the gatekeepers of existing journals, or because existing journals do not focus enough on the discussions and issues that new schools of thought want to investigate. Journals, in short, do not just police quality. They police fashion and trends. MDPI’s model is to eschew all questions of fashion, scope or significance. It will publish anything that can get past its peer review. It is up to academic communities to determine how interesting and important these publications are.

This means that the journals are more likely to contain work that is rather inconsequential, and unfashionable. They will house fewer prestigious findings. But it seems to me unreasonable to set up the upper end of a hierarchy and then object when the lower end establishes itself. Journals like those MDPI publishes are the logical consequence of a broader system of hierarchical publishing prestige. They are not free-riders on the system. They are a corollary of competitive, prestige-driven, restlessly energetic researchers who will not believe that rejection means that their work is unpublishable. The explosive growth of these journals was, in some respects, waiting to happen.[1]

As universities grow in number and size globally, as researchers proliferate, and as we continue to insist that our work is not as boring and irrelevant as everyone else finds it, so it will become harder for existing journals and their epistemic communities to contain our enthusiasms and energies. This, I suspect, explains some of the demand for MDPI journals.

The success of their model does not, for me, invalidate the work they contain. Instead it poses a series of questions about how MDPI has coped with its own success. As the reply to my first blog from Delia Mihaila (MDPI’s CEO) indicates (at the bottom of this page), their growth has required hiring large numbers of new staff simply to process the manuscripts that the demand has generated. But learning how to do that, and the mores of dealing with papers and reviewers, is hard.

So the questions I would like to understand are:

- How do MDPI’s back offices cope with these dramatic increases?

- How are standards maintained, and how do they learn from their mistakes?

- What best practices do they cultivate and how?

- Where has the pursuit of volume compromised quality?

- What internal management mechanisms are producing so many unwelcome emails and such questionable APC waiver decisions?

If we could explore these questions I think it would take us into more interesting territory than questioning the validity of these journals’ existence. MDPI’s growth and size are bound to make other academic publishing houses, which we already know are seriously problematic, take note of its practices. It is possible that MDPI’s success could become a means of leveraging progressive change.

For instance, it could raise standards of transparency. MDPI’s practice of accessibly publishing rejection rates and APCs for each journal is not standard practice in the sector. It should be. As I have urged before, it could make it standard practice to publish the APC revenues received and waivers given for each journal.

And finally, MDPI’s success is worth understanding because it does pose a challenge. One relatively small company has gone from publishing obscurity (26 journals and 2000 papers per year in 2009) to over 200 journals and over 100,000 publications in just a decade. And this has demonstrated considerable revenue-raising potential. What would happen if university libraries and academics were to organise themselves into a similarly powerful operation, but one in which all the revenues were returned to the research communities which produced them?

Dan Brockington,

Glossop, 24th July 2020

Methods

From the MDPI website, I copied submission and publication data for the 154 journals that began business in or before 2016 and which handled more than 10 papers in that year.

I took APCs from the website, but had to estimate likely charges in some instances where APCs for early years were unavailable. In 2018 and 2019, APCs changed every six months. I have therefore calculated the average APC for each year and applied that average to all papers published in that year. This is inaccurate, as the lower fees apply to papers published in the first half of the year and the higher fees to those in the second half. Nevertheless, it provides a satisfactory shorthand in the absence of better data.
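The inclusion rule and the averaged-APC shorthand can be sketched in a few lines of Python. This is a minimal illustration, not the spreadsheet actually used for this blog: the `JournalYear` record, its field names and the sample figures are hypothetical, it reads "that year" in the inclusion rule as 2016, and it assumes exactly two APC rates per year, one for each half.

```python
from dataclasses import dataclass


@dataclass
class JournalYear:
    """One journal's figures for a single year (hypothetical record layout)."""
    name: str
    first_year: int    # year the journal began publishing
    papers_2016: int   # papers handled in 2016, used for the inclusion rule
    papers: int        # papers published in the year being estimated
    apc_h1: float      # APC in CHF for the first half of the year
    apc_h2: float      # APC in CHF for the second half of the year


def included(j: JournalYear) -> bool:
    # Keep journals that began in or before 2016 and handled more than 10 papers in 2016.
    return j.first_year <= 2016 and j.papers_2016 > 10


def estimated_revenue(j: JournalYear) -> float:
    # Shorthand: apply the mean of the two half-year APCs to every paper in the year.
    return j.papers * (j.apc_h1 + j.apc_h2) / 2


def split_revenue(papers_h1: int, papers_h2: int, apc_h1: float, apc_h2: float) -> float:
    # The figure the shorthand approximates, if the half-year split of papers were known.
    return papers_h1 * apc_h1 + papers_h2 * apc_h2


def gross_revenue(journals: list[JournalYear]) -> float:
    # Sum the averaged-APC estimate over the journals that pass the inclusion rule.
    return sum(estimated_revenue(j) for j in journals if included(j))


sample = [
    JournalYear("Journal A", 2015, 50, 200, 1400.0, 1600.0),
    JournalYear("Journal B", 2018, 0, 90, 1000.0, 1200.0),  # excluded: too recent
]
print(gross_revenue(sample))                    # 300000.0
print(split_revenue(80, 120, 1400.0, 1600.0))   # 304000.0
```

The two figures agree only when papers are split evenly between the two halves of the year. When more papers appear in the second, more expensive half, as in the hypothetical 80/120 split above, the averaged shorthand comes out slightly lower than the split-based figure.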

The raw data I have used are available here.

A PDF of this blog is available here.

[1] I should add that I have only the highest respect for Arun. The fact that I have repeatedly failed to write anything good enough for World Development in recent years, despite multiple attempts, is entirely coincidental to my argument here 😉

«MDPI’s model is to eschew all questions of fashion, scope or significance. It will publish anything that can get past its peer review. It is up to academic communities to determine how interesting and important these publications are.»

This seems unquestionably good to me! It was also the original promise of PLOS One. Nowadays only the proponents of post-publication review and overlay journals seem to keep bearing this flag consistently.

Personally, whenever I do some research for informative purposes (e.g. for Wikipedia articles or to give introductory material to a friend or in an online conversation), I find that I drown in rubbish at Elsevier’s properties, while at MDPI a well-written and well-edited article often comes up, which may be unfashionable and unpretentious but just does its job.

MDPI also seems to have a more modern software pipeline, compared to publishers like Elsevier which still live in the early 1990s when PDF had just started spreading. It would be interesting to hear from authors whether they experience less frustration with MDPI’s editing, compared to competitive journals which routinely force authors to spend many hours correcting publisher-introduced errors.

Dear Dan, this seems like an old blog post but I came across it only today, coming from here https://scholarlykitchen.sspnet.org/2020/08/10/guest-post-mdpis-remarkable-growth/

I have developed an interest in MDPI, and scraped their website to understand how many Special Issues they are running (I was getting ~1 invitation a week to edit an SI in various journals of theirs). The result of my data collection is here: https://github.com/paolocrosetto/MDPI_special_issues

In a nutshell: the number of SIs at MDPI journals is growing exponentially. So much so that 35 journals have more than 1 SI *per day*, and Sustainability has 8.7 special issues per day, Sat and Sun included.

I tend to think of this exponential growth in Special Issues as a predatory practice. Quality depends on the guest editors only, and given the amount of spam I receive from MDPI (and I am Mr. Nobody in my field) it looks like anyone could be a guest editor. I find it hard to come up with an explanation other than predatory practices for such a strategy, and impossible to believe that there is quality material for 3500 Special Issues on sustainable cities in 2021 alone.

I’d be happy to hear your thoughts about this.

Paolo

In 2015, Martyn Rittman from MDPI expressed his belief “that the majority of scholars are able to differentiate reputable publishers from those with questionable practices” (https://www.frontiersin.org/…/10…/fnbeh.2015.00201/full). I hope they are.

See https://www.facebook.com/meehkal/posts/10225656872838310
